As part of our recent Digital Humanities Doctoral School's programme, participants were asked to write a blogpost capturing their experiences with the digital humanities. In this week's blogpost, we welcome Melina Jander, a PhD student and research assistant in the Early Career Research Group eTRAP (electronic Text Reuse Acquisition Project), University of Göttingen. From September - November 2017, Melina came to the Ghent Centre for Digital Humanites, with a U4 fellowship, which supports young researchers to gain international experience and to network with researchers. Melina's academic background is in German Philology with a focus on German Literature. She also has a Bachelor's Degree in Cultural Anthropology.

100 Years of Dystopian Novels: A Literary Computational Analysis of Core Primitives

What would it be like to live in the worst possible version of the world? How would such a bad place look like? And could we make this place any better?

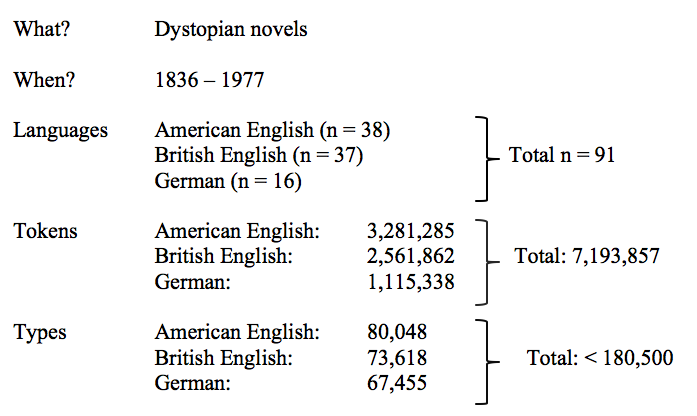

These questions, among others, are asked and discussed in the literary genre dystopian fiction. While the emergence of the dystopian concept can be dated back to Thomas More’s novel Utopia (1516), the term dystopia was only coined in 1868 by British philosopher John Stuart Mill (Sisk 1997). That year serves as a chronological starting point for the research project contemplated here and plays a significant role for the literary corpus that has been put together: based on literature about dystopian novels in the 19th and 20th century, 91 novels have been selected to form the corpus of investigation. The works’ origins lay in England, America and Germany. These three regions and languages have been selected due to dystopia’s popularity in the two former countries, and due to the genre being ‘underrepresented’ in the German literature during these centuries (Campbell 2014). Putting a research corpus together can be a sensitive act. Not only the questions of different languages and the time span are of core significance, but also the accessibility of the chosen texts, as well as the quality of the data and, for obvious reasons, the laws of copyright. Taking all these factors into account, we (so far) can present a corpus that carries the following information:

Within a few years, a human being can read 91 novels, even when reading is just a hobby and not part of his or her profession. But can a human read 91 novels within three years (the estimated time for the research project) AND answer the questions stated above? Probably not. Part of the afore mentioned questions come down to concepts, or topics, depicted and discussed in the novels: the oppressive power of a totalitarian regime, surveillance, the loss of privacy, environmental crises and great wars are only a few of the long list of society’s anxieties that are dealt with in these works of fiction (Mohr 2005; Geef 2015).

Since we accept that a manual investigation of the corpus put together for this project cannot be exhaustive, computational methods are and will be used to explore the 91 novels. As technical starting points, the methods of text reuse detection and topic modelling are considered useful. While text reuse detection—for instance, with the software TRACER—spots written repetition and borrowing of text by ‘looking at them’ side-by-side’ (Franzini et al. 2016), topic modelling—first steps can easily be performed with MALLET—is a statistical method which clusters words in a set of documents (Blei 2012). Combining these two strategies could shed some light on which topics are present in the 91 novels and how certain concepts might have been reused or developed over time.

First experiments show that those two techniques raise important questions on the applicability of computational methods from a humanist’s point of view. To investigate this question and to be able to formulate solid critiques on the applicability of certain computational approaches, several software and technical ideas for text analysis shall be investigated in the near future.

References

Blei, D. M. (2012): Probabilistic Topic Models. Surveying a suite of algorithms that offer a solution to managing large document archives. In: Communications of the ACM, Vol. 55(4), ACM, New York (pp. 77-84).

Campbell, B. B. (2014): Detectives, Dystopias, and Poplit. Studies in Mordern German Genre Fiction. In Campbell, B. B.; Guenther-Pal, A.; Rützou Petersen, V. (eds.): Studies in German Literature, Linguistics, and Culture, pp. 29-88.

Franzini, G., Franzini, E., Büchler, M. (2016): Historical Text Reuse: What Is It?

Geef, D. (2015): Late Capitalism and its Fictious Future(s).

Mohr, D. (2005): Worlds Apart? Dualism and Transgression in Contemporary Female Dystopias. Jefferson, NC: McFarland (pp. 11-40).

Sisk, D. W. (1997): Transformations of Language in Modern Dystopias.